A fast nomic embedding generator

Introduction

In this article, I explain how to create a simple Python web service that calculates text embeddings with the nomic-embed-text model, and how to consume it from PHP code.

The header image is a representation of the nomic vector space.

The full code of this post, and more, can be found here.

A CSV file with the text of 25,000 Wikipedia articles and their nomic text embeddings can be downloaded here (⚠ 196 MB).

Motivation

I wanted to play with the Simple English Wikipedia dataset hosted by OpenAI (~700 MB zipped, 1.7 GB CSV file) in a PHP project using LLPhant. The dataset contains 25,000 Wikipedia articles with their text embeddings. However, the embeddings in the file were calculated with OpenAI's embedding models, and for my tests I wanted to use only self-hosted LLMs. So I decided to recalculate the embeddings with the nomic-embed-text encoder, as it is distributed under the Apache License.

My first approach was to run the model with Ollama or LocalAI. However, the performance was quite poor: overnight, my computer was only able to generate embeddings for a few hundred articles.

I needed a faster solution...

What's the point of these embeddings?

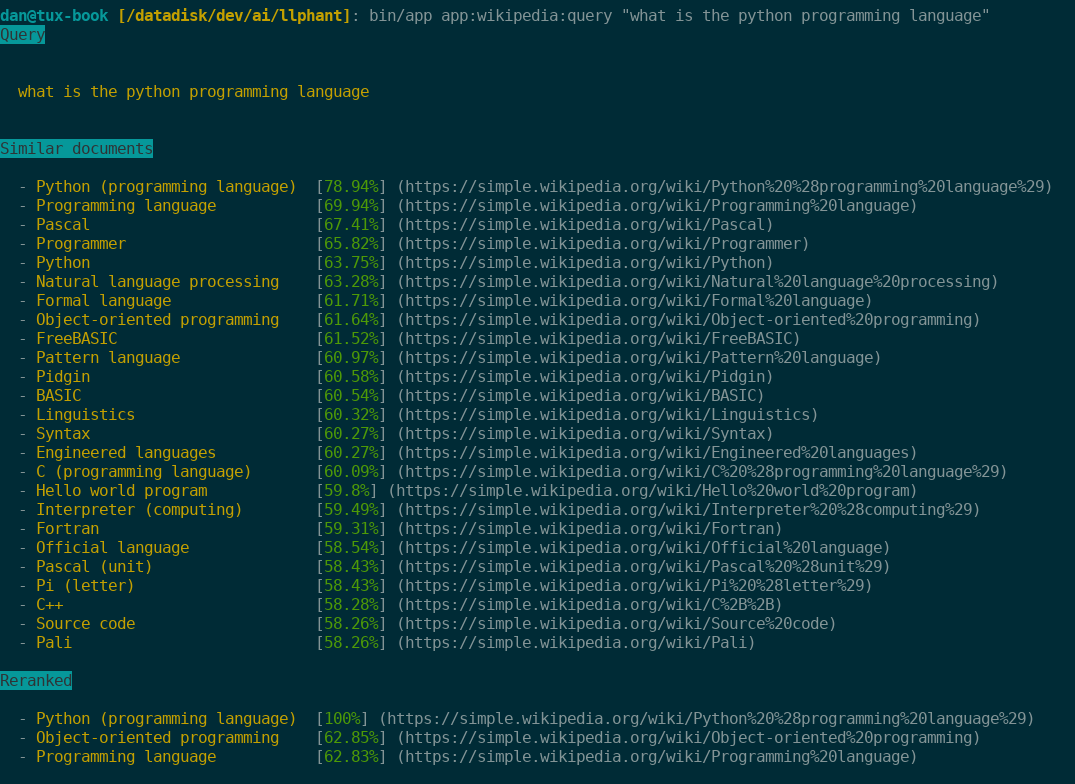

When you have the embeddings of all the Wikipedia articles, it is possible to perform a similarity search on a query.

To do this, we embed the text of the query into a vector, then conduct a similarity search with the vectors in the database, and retrieve a list of documents with similar content. Using another LLM to rerank the documents, we can filter out the ones that do not correspond to the query's semantics.
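The retrieval step comes down to ranking the stored vectors by their similarity to the query vector. The sketch below uses cosine similarity in plain Python. The titles and the three-dimensional vectors are made-up assumptions, real nomic vectors are much longer, and a real setup would delegate this step to a vector store rather than a Python loop. The reranking step is not shown.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # avoid division by zero for empty/zero vectors
    return dot / (norm_a * norm_b)


def top_k(query_vector: Sequence[float],
          documents: dict[str, Sequence[float]],
          k: int = 3) -> list[tuple[str, float]]:
    """Return the k (title, score) pairs closest to the query vector."""
    scored = [(title, cosine_similarity(query_vector, vector))
              for title, vector in documents.items()]
    scored.sort(key=lambda item: item[1], reverse=True)  # best match first
    return scored[:k]


# Toy vectors standing in for real embeddings (illustrative only)
docs = {
    "Python (programming language)": [0.9, 0.1, 0.0],
    "Python (snake)": [0.1, 0.9, 0.1],
    "Java (programming language)": [0.8, 0.2, 0.1],
}
print(top_k([1.0, 0.0, 0.0], docs, k=2))
```

With this query vector, the two programming-language articles rank above the snake article.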

The final list of documents can be fed into a question-answering LLM to provide context. This is the principle of Retrieval-Augmented Generation (RAG).
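To make the "context" part concrete, here is a minimal sketch of how retrieved documents might be packed into a prompt for the question-answering model. The function name `build_rag_prompt`, the template wording and the `max_chars` budget are all hypothetical. Real systems usually budget in tokens rather than characters.

```python
def build_rag_prompt(question: str, documents: list[str], max_chars: int = 2000) -> str:
    """Place numbered retrieved documents before the question (hypothetical template)."""
    context_parts = []
    used = 0
    for i, doc in enumerate(documents, start=1):
        snippet = doc[: max_chars - used]  # truncate to the remaining budget
        if not snippet:
            break  # context budget exhausted
        context_parts.append(f"[{i}] {snippet}")
        used += len(snippet)
    context = "\n".join(context_parts)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_rag_prompt(
    "What is Python?",
    ["Python is a programming language.", "Guido van Rossum created Python."],
)
print(prompt)
```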

In the screenshot above, note how the article about the python (the snake) is retrieved but then completely filtered out by the reranking.

Calculate the embedding

With the help of the Sentence Transformers Python library, generating text embeddings is very simple.

from sentence_transformers import SentenceTransformer

embedding_generator = SentenceTransformer('nomic-ai/nomic-embed-text-v1.5', trust_remote_code=True)
embeddings = embedding_generator.encode(['This is the text to embed'])
print(embeddings[0].tolist())

The first time the code runs, it downloads the nomic-embed-text model. The model is about 500 MB, so the download takes some time and requires disk space.

By default, the model runs on the GPU if one is available and falls back to the CPU otherwise. On my machine, I ran into "heating problems" with the GPU, so I forced the model to run on the CPU:

embedding_generator = SentenceTransformer(..., device='cpu')
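According to the nomic-embed-text-v1.5 model card, every input should start with a task prefix. Examples are `search_document: ` for texts that are stored and `search_query: ` for queries. The first snippet in this section embeds the raw text. Below is a minimal sketch of a helper that adds the prefixes before calling `encode()`. The helper name is hypothetical, and the prefix list is taken from the model card.

```python
# Task prefixes listed on the nomic-embed-text-v1.5 model card
TASK_PREFIXES = {
    "search_document": "search_document: ",
    "search_query": "search_query: ",
    "clustering": "clustering: ",
    "classification": "classification: ",
}


def with_task_prefix(texts: list[str], task: str) -> list[str]:
    """Prepend the nomic task prefix to each text (hypothetical helper)."""
    try:
        prefix = TASK_PREFIXES[task]
    except KeyError:
        raise ValueError(
            f"Unknown task {task!r}; expected one of {sorted(TASK_PREFIXES)}"
        ) from None
    return [prefix + text for text in texts]


articles = with_task_prefix(["Paris is the capital of France."], "search_document")
query = with_task_prefix(["What is the capital of France?"], "search_query")
print(articles, query)
# With the model loaded as above (not executed here):
# embeddings = embedding_generator.encode(articles)
```

Stored articles and incoming queries get different prefixes. The prefix has to be applied consistently at indexing time and at query time.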

A simple Python API

Flask makes it possible to create an API quickly, without much boilerplate code.

An API that responds on the /hello route and says... well... hello can be implemented as:

#!/usr/bin/env python
from flask import Flask

api = Flask(__name__)


@api.route('/hello', methods=['GET'])
def hello():
    return 'Hello API', 200


if __name__ == "__main__":
    api.run()

That's everything I needed.

Request Validation

Even though it’s not strictly required for such a small project, I wanted to validate the incoming requests. I used the marshmallow library, which makes it easy to validate a JSON payload against a schema.

The API expects a request in the form { "document": "the data to embed" }, so the code is simply:

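from flask import Flask, request
from marshmallow import Schema, ValidationError, fields

api = Flask(__name__)


class EmbeddingSchema(Schema):
    document = fields.Str(required=True)


@api.route('/embedding', methods=['POST'])
def embed():
    body = request.get_json(force=True)
    schema = EmbeddingSchema()
    try:
        # load() raises ValidationError when the payload does not match the schema
        result = schema.load(body)
    except ValidationError:
        return 'Invalid request', 400

    document = result['document']
    # Placeholder: echo the validated input (the final API below computes the embedding)
    return {'document': document}, 200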
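To show what the schema accepts and rejects, here is a dependency-free approximation of `EmbeddingSchema().load()`. It is a hypothetical helper for illustration and is not part of the service. The error messages only approximate marshmallow 3's defaults. Note that an empty string passes the schema.

```python
from typing import Any, Optional


def validate_embedding_request(body: Any) -> tuple[Optional[str], dict[str, list[str]]]:
    """Rough stand-in for EmbeddingSchema().load(body) (hypothetical helper).

    Returns (document, errors); errors is empty when the payload is valid.
    """
    if not isinstance(body, dict):
        # The payload must be a JSON object, not a list, string or null
        return None, {"_schema": ["Invalid input type."]}

    errors: dict[str, list[str]] = {}
    if "document" not in body:
        errors["document"] = ["Missing data for required field."]
    elif body["document"] is None:
        errors["document"] = ["Field may not be null."]
    elif not isinstance(body["document"], str):
        errors["document"] = ["Not a valid string."]

    # By default, marshmallow 3 also rejects keys the schema does not declare
    for key in body:
        if key != "document":
            errors[key] = ["Unknown field."]

    if errors:
        return None, errors
    return body["document"], {}


print(validate_embedding_request({"document": "the data to embed"}))
print(validate_embedding_request({"text": "the data to embed"}))
```

Because `""` is a valid string for the schema, an empty document has to be rejected separately in the route.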

Putting it all together

The final API looks like this.

The embedding_generator service is created once at startup (rather than inside the route), because loading it takes time and it does not need to be recreated for every request. Once created, it stays in memory for as long as the API runs.

#!/usr/bin/env python
from typing import Any

from flask import Flask, jsonify, request
from marshmallow import Schema, ValidationError, fields
from sentence_transformers import SentenceTransformer


class EmbeddingSchema(Schema):
    document = fields.Str(required=True)


# Loaded once at startup and kept in memory for all requests
embedding_generator = SentenceTransformer("nomic-ai/nomic-embed-text-v1.5", trust_remote_code=True)

api = Flask(__name__)


def generate_error(error: str, payload: Any = None, status: int = 400):
    # Error envelope: {"error": ..., "payload": ...}
    return jsonify({'error': error, 'payload': payload}), status


def generate_response(data: Any, status: int = 200):
    # Success envelope: {"data": ...}
    return jsonify({'data': data}), status


@api.route('/embedding', methods=['POST'])
def embed():
    # force=True parses the body as JSON even without a JSON Content-Type header
    body = request.get_json(force=True)
    schema = EmbeddingSchema()
    try:
        result = schema.load(body)
    except ValidationError as err:
        return generate_error('Validation failed', err.messages)

    query = result['document']
    if query == '':
        return generate_error('No query given.')

    embeddings = embedding_generator.encode([query])
    # encode() returns a NumPy array; convert the first row to a JSON-friendly list
    return generate_response(embeddings[0].tolist())


if __name__ == "__main__":
    api.run()
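Before moving to PHP, it can be handy to smoke-test the endpoint from Python. The sketch below uses only the standard library. `build_request`, `parse_response` and `embed` are hypothetical helper names. The URL assumes Flask's default `127.0.0.1:5000`, which is also what the PHP client further down uses.

```python
import json
import urllib.request

API_URL = "http://127.0.0.1:5000/embedding"  # Flask's default host and port


def build_request(text: str, url: str = API_URL) -> urllib.request.Request:
    """Build the POST request the /embedding route expects."""
    payload = json.dumps({"document": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_response(raw: bytes) -> list[float]:
    """Return the vector from a {"data": ...} body; raise on an {"error": ...} body."""
    body = json.loads(raw)
    if "error" in body:
        raise ValueError(f"{body['error']}: {body.get('payload')}")
    return body["data"]


def embed(text: str) -> list[float]:
    """Send one document to the running API and return its embedding."""
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses; the error
    # body can be read from the exception and passed to parse_response.
    with urllib.request.urlopen(build_request(text), timeout=60) as response:
        return parse_response(response.read())
```

With the API running, `embed("This is the text to embed")` should return the same vector as the PHP client below.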

A little something extra

Everything works straightforwardly in Python. As a little extra, we can also build an executable from the Python code with the help of Cython.

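# Translate api.py into C, with a main() that starts an embedded Python interpreter
cython3 --embed -o api.c api.py
# Locate the Python headers
python_include=$(python -c "import sysconfig; print(sysconfig.get_path('include'))")
# Compile and link against libpython (the lib64 path depends on the distribution)
gcc -Wall api.c -o api -L$(python-config --prefix)/lib64 $(python-config --embed --ldflags) -I"$python_include"
# The intermediate C file is no longer needed
rm api.c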

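Then, we can simply copy the api executable around. Keep in mind that it still runs on libpython and imports Flask, marshmallow and Sentence Transformers at runtime, so the target machine needs the same Python version and those packages installed.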

Call the API from PHP

With the help of Guzzle, calling the API from PHP is easy.

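<?php declare(strict_types=1);

// Composer autoloader (assumes Guzzle was installed with Composer)
require __DIR__ . '/vendor/autoload.php';

use GuzzleHttp\Client;

$text = "this is the text to be embedded";

$client = new Client(['base_uri' => 'http://127.0.0.1:5000']);

// The 'json' option encodes the body and sets the Content-Type: application/json header.
// By default, Guzzle throws a ClientException on 4xx responses such as validation errors.
$response = $client->post('/embedding', [
    'json' => ['document' => $text],
]);

$body = $response->getBody()->getContents();

/** @var array{data?: float[], error?: string, payload?: mixed} $result */
$result = json_decode($body, true, 512, JSON_THROW_ON_ERROR);

// $result['data'] is an array of floats, so print it as a list instead of echoing the array
echo implode(', ', $result['data']), PHP_EOL;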

Conclusion

With this small API hosted on my computer and the model running on the CPU, I was able to embed the 25,000 Wikipedia articles in about 16 hours. By comparison, the initial approach only managed a few hundred articles overnight.
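For a rough sense of throughput, the figures above translate into an average rate. This is an average over the whole run, since per-article timings are not reported.

```python
# Figures from this post: 25,000 articles embedded in about 16 hours
articles = 25_000
hours = 16

per_hour = articles / hours                     # 1562.5 articles per hour
seconds_per_article = hours * 3600 / articles   # about 2.3 seconds per article
print(f"{per_hour:.1f} articles/hour, {seconds_per_article:.2f} s/article")
```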